Developing and setting up a chatbot is relatively easy. If you don’t believe us, just Google “10 Minutes to a Chatbot” and you’ll get hundreds of step-by-step articles and even some video tutorials. That ease is a good indicator that the technology stack needed to host a chatbot is mature. In fact, it’s largely the same stack you would use for a web application, and there are well-established best practices for securing it, whether it’s built on Node.js, PHP, Java, .NET or something else. So if you have followed those best practices, it is fair to say that an individual chatbot can be as secure as a website. Even so, the chatbot ecosystem as a whole still needs improvement.

What has received far less attention are the security risks users face when engaging and interacting with chatbots. These issues have largely been addressed for more mature platforms like websites and email, but they remain largely unaddressed for chatbots because the chatbot ecosystem is still immature.

If the three challenges presented below are not addressed, chatbots could become easy attack vectors for bad actors:

- Unconfirmed identity of the chatbot

- Serving malicious URLs that can deliver malware

- No centralized trusted authority for consumers

Let’s explore each of these in more detail.

#1: Unconfirmed Identity of the Chatbot

With websites, users can rely on the domain name. If the URL starts with https://www.apple.com/, users are confident that it is the authentic Apple website. With chatbots, there is no equally obvious signal.

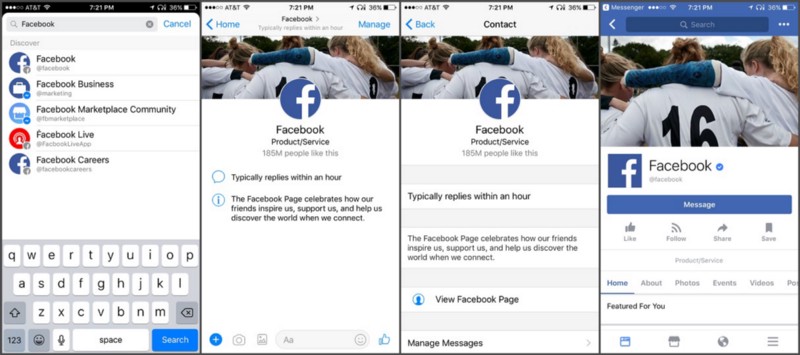

A counterfeit chatbot can be set up in about 15 minutes. With the addition of a few simple branding elements, it can easily appear to represent a consumer’s favorite brand, and the consumer will have a hard time telling it apart from the real thing. Case in point: while preparing to meet with a large retailer, I searched Facebook Messenger to see if they had a presence there and was surprised to find more than 10 matches that looked legitimate. To verify whether a particular result was legitimate, I had to:

- Click on the search result to access the conversation

- Select the name in the title bar

- Click “View Facebook Page”

- Look for a blue check mark denoting it was a verified page

To date, I haven’t found a single Facebook Messenger user who knows how to do this, and most aren’t even aware that Facebook has verified pages. One simple change that might help would be for Facebook to show the verified indicator next to the name of the Messenger account. But at least Facebook has a defined verification process; not all popular messaging platforms do.

Back to the counterfeit chatbot: it’s easy to imagine a consumer engaging with it, believing it represents the authentic brand. When asked to enter their website account credentials to verify their identity, the consumer complies. Now the bad actors have everything they need to take over the unsuspecting consumer’s account.

While a counterfeit chatbot can be taken down as soon as it is reported, it’s likely the bad actors will just move the chatbot elsewhere, resulting in a game of whack-a-mole.

Here are some steps you can take to help your consumers know your chatbot is authentic:

- Verify your brand’s identity on each messaging platform that offers verification.

- Establish a page on your brand’s website that lists your chatbots, with links and their official names. If you offer SMS text messaging as a channel, publish the phone number.

- Link back to that website page from within your brand’s chatbots.
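If you want that official page to be checkable by tools as well as people, one option is to also publish it in a machine-readable form. The sketch below is hypothetical: the JSON field names, the brand “Example Co”, and the handles are illustrative assumptions, not a standard any messaging platform recognizes. It only shows the idea that the brand’s own website is the source of truth a channel can be checked against.

```python
import json

# Hypothetical machine-readable companion to the brand's "official chatbots" page.
# Field names and values are illustrative assumptions, not an established format.
OFFICIAL_CHANNELS_JSON = """
{
  "brand": "Example Co",
  "channels": [
    {"platform": "messenger", "name": "Example Co Support", "handle": "examplecosupport"},
    {"platform": "sms", "name": "Example Co Alerts", "handle": "+15555550123"}
  ]
}
"""


def load_registry(raw: str) -> dict[str, set[str]]:
    """Index the published channels by platform, with handles lowercased."""
    data = json.loads(raw)
    registry: dict[str, set[str]] = {}
    for channel in data["channels"]:
        registry.setdefault(channel["platform"], set()).add(channel["handle"].lower())
    return registry


def is_official_channel(registry: dict[str, set[str]], platform: str, handle: str) -> bool:
    """Return True only if the handle is listed for that platform on the brand's page."""
    return handle.lower() in registry.get(platform, set())


if __name__ == "__main__":
    registry = load_registry(OFFICIAL_CHANNELS_JSON)
    print(is_official_channel(registry, "messenger", "ExampleCoSupport"))
    print(is_official_channel(registry, "messenger", "examplecosupport-deals"))
```

A look-alike handle such as `examplecosupport-deals` is rejected simply because it does not appear on the brand’s own page, which is the same check a careful user would make by hand.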

#2: Serving Malicious URLs

If a brand is not well-known, consumers face an even bigger challenge. Imagine a coupon chatbot that scours the web for brands selling items you are interested in and notifies you when it finds coupons. If that chatbot is malicious, it can use buttons to direct you to websites serving malware, and you, the chatbot user, have no way of knowing what’s about to happen until after you click the button.

There are IT services, like MetaCert, that protect enterprise messaging systems. MetaCert works by quickly checking URLs in messages for malicious content and/or pornography and blocking those URLs before they are delivered to the enterprise user. Consumers, however, have few such options. As bot builders, we can use the MetaCert service to protect against malicious third-party links, but our users have no way of knowing or verifying that we’ve done this. For many users, trying a chatbot from a lesser-known brand may simply not be worth the gamble.
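On the bot builder’s side, the idea is to screen every outgoing link, in message text and in buttons, before it reaches the user. The sketch below swaps in a simple hostname blocklist as a stand-in for a reputation service; `BLOCKED_HOSTS`, `is_flagged`, and `screen_message` are hypothetical names, and MetaCert’s actual API is not modeled here. A real deployment would replace the blocklist lookup with a call to such a service.

```python
import re
from urllib.parse import urlsplit

# Hypothetical blocklist standing in for a URL-reputation service such as MetaCert.
# A real bot would query that service instead; its API is not modeled here.
BLOCKED_HOSTS = {"malware.example", "phish.example"}

# Rough pattern for http(s) links embedded in free text.
URL_PATTERN = re.compile(r"https?://[^\s<>\"']+")


def is_flagged(url: str) -> bool:
    """Flag a URL if its host, or any parent domain of it, is on the blocklist."""
    try:
        host = (urlsplit(url).hostname or "").lower()
    except ValueError:
        # Unparseable URL (e.g. a malformed IPv6 host): fail closed and flag it.
        return True
    parts = host.split(".")
    # For "cdn.malware.example" this checks "cdn.malware.example",
    # "malware.example" and "example".
    return any(".".join(parts[i:]) in BLOCKED_HOSTS for i in range(len(parts)))


def screen_message(text: str, buttons: list[dict]) -> tuple[str, list[dict]]:
    """Replace flagged links in the text and drop buttons whose URL is flagged."""
    clean_text = URL_PATTERN.sub(
        lambda m: "[link removed]" if is_flagged(m.group(0)) else m.group(0), text
    )
    # Buttons without a "url" key (e.g. postback buttons) are kept as-is.
    safe_buttons = [b for b in buttons if not is_flagged(b.get("url", ""))]
    return clean_text, safe_buttons


if __name__ == "__main__":
    text, buttons = screen_message(
        "Deals: https://shop.example/coupons and https://cdn.malware.example/get",
        [
            {"title": "Get coupon", "url": "https://phish.example/login"},
            {"title": "Browse", "url": "https://shop.example/"},
        ],
    )
    print(text)
    print(buttons)
```

Here the link to `cdn.malware.example` is replaced in the text and the “Get coupon” button is dropped, while the `shop.example` links pass through. As the paragraph above notes, none of this is visible to the user, which is exactly why it does not by itself create trust.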

Ultimately, this issue is best solved by the messaging platforms, similar to how Apple protects its users from malicious apps in the App Store. While bad apps occasionally get through its filters, users are generally not afraid to try new apps.

#3: No Trusted Verification Authority

Issues #1 and #2 persist because there is currently no trusted verification authority. And while users assume that the messaging platforms are protecting them, currently they are not, at least not completely. So how can users verify that the chatbot they’re using is legitimate? How can they know they’re being protected from compromised third-party web links? Unfortunately, there is currently no reliable way for a user to be certain that a chatbot is owned by the brand it claims to represent, and no trusted third party they can turn to for verification.

For external links, there’s no way for the chatbot user to verify that the provider is using a service like MetaCert. Ironically, one of the advantages chatbots offer brands is that they know the identity of their users, yet the users still do not know the provider’s identity. So where does this leave us?

The wider adoption of chatbots may be slowed unless messaging platforms or an independent authority develop trust mechanisms for consumers, like those that exist for the web, email, and apps.

In the meantime, users need to treat unfamiliar chatbots as they would an email from someone they don’t know or a website that is new to them: with caution and a healthy dose of skepticism.

Chatbot Security: Summary

We are still in the early days of chatbots, and as such, they probably have not yet attracted the full attention of malicious actors. However, if and when chatbot adoption goes mainstream, the bad actors will come. It’s up to us in the chatbot community to develop solutions to these chatbot security challenges proactively and protect our users. Adopting chatbot best practices and prioritizing security are critical steps. Let’s keep this conversation going.